For AI server deployments, secure HBM inventory now or risk 6+ month delays in 2025.

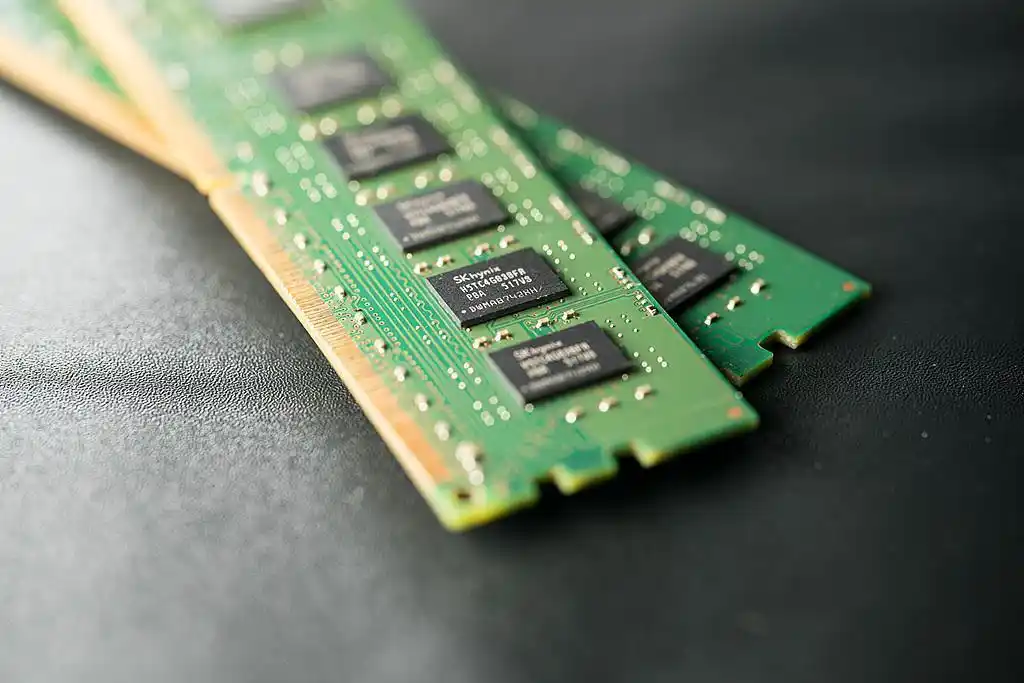

Global memory prices are rising sharply, driven by supply constraints and surging demand. Key factors include:

Price Projections (Q3 2024 – Q1 2025):

| Category | Current Increase | Q4 Forecast |
|---|---|---|
| DDR5 RDIMM | +15-20% | +10-15% |
| HBM3/E | +30%+ | +20-25% |
| DDR4 (Enterprise) | +10-12% | Stabilizing |
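
For budgeting, it can help to see what the two columns imply together. The sketch below assumes the Q4 forecast applies on top of the current increase (the table does not state this), and it treats the "+30%+" HBM figure as a floor of exactly 30%. The helper name `compound_range` is illustrative only.

```python
def compound_range(current, forecast):
    """Compound two (low, high) percentage increases applied back to back.

    Assumption: the forecast increase is applied to the already-raised price.
    """
    low = (1 + current[0] / 100) * (1 + forecast[0] / 100) - 1
    high = (1 + current[1] / 100) * (1 + forecast[1] / 100) - 1
    return round(low * 100, 1), round(high * 100, 1)


# DDR5 RDIMM: +15-20% now, then +10-15% in Q4
ddr5 = compound_range((15, 20), (10, 15))

# HBM3/E: "+30%+" taken as a 30% floor, then +20-25% in Q4
hbm = compound_range((30, 30), (20, 25))

print(f"DDR5 RDIMM cumulative: +{ddr5[0]}% to +{ddr5[1]}%")
print(f"HBM3/E cumulative (floor): +{hbm[0]}% to +{hbm[1]}%")
```

Under these assumptions, DDR5 RDIMM lands roughly 26.5% to 38% above the starting price, and HBM3/E at least 56% to 62.5% above it. Because the increases multiply, the result is higher than simply adding the two percentages.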

HBM’s Role in AI Servers:

Production Challenges:

Short-Term (0-6 Months):

Explore Alternatives:

Long-Term (2025):

The memory market will remain volatile through 2025, with HBM demand outstripping supply. Enterprises must: